The Most Mindblowing Realization About ChatGPT

My ongoing exploration into the inner workings of ChatGPT has brought me to a crucial understanding that most people don’t realize:

ChatGPT’s implementation is shockingly…mind-blowingly… simple.

It joins other such mysterious phenomena of “unimaginable complexity emergent from incredible simplicity” that the human mind struggles to comprehend… like how a short DNA sequence that could fit on a CD encodes a complete human.

When folks hear stats like “terabytes of training data” and “175 billion parameters” they think “wow – this thing is super complicated!”. That impression is completely wrong. The “engine” that is ChatGPT is so incredibly simple, even I struggle to believe that it produces the output that it does.

Seeing ChatGPT in-action helps us overcome our innate biases that limit our understanding of how memory works (and how the human brain works in general.)

I’ll explain.

Understanding ChatGPT’s Inner Layout

ChatGPT is surprisingly willing to share the details of her architecture. In my prior “interviews” with her, I’ve collected all the data necessary to calculate her layout and complexity below. Another interview (here) verified a few assumptions that I had made.

To understand ChatGPT’s inner workings follow these steps:

1. Imagine 2 million dots.

Actually, you don’t have to imagine them. It’s the number of pixels in a 2 megapixel photo (your iPhone defaults to 12 megapixels.)

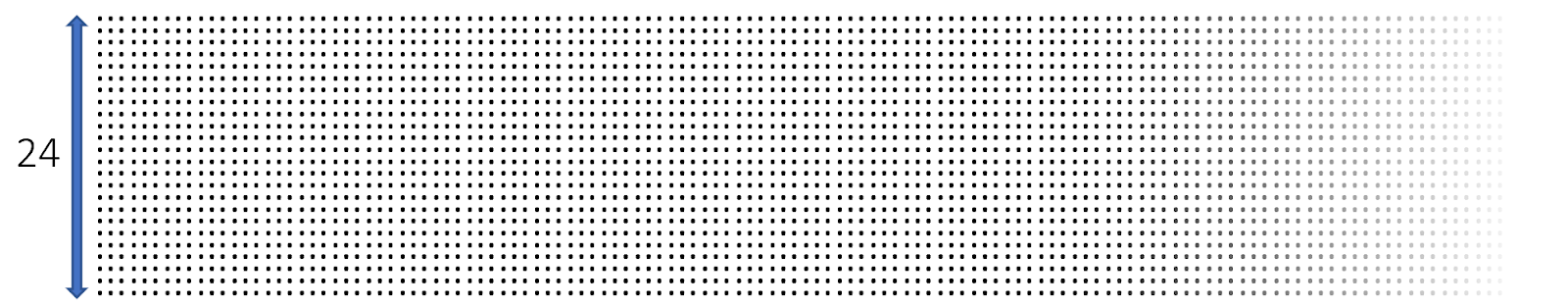

They fit onto a small image. Here are 1 million dots, so imagine two of these images:

1 Million Dots

2. Rearrange those dots so they’re in 24 long rows, about 85,000 dots wide

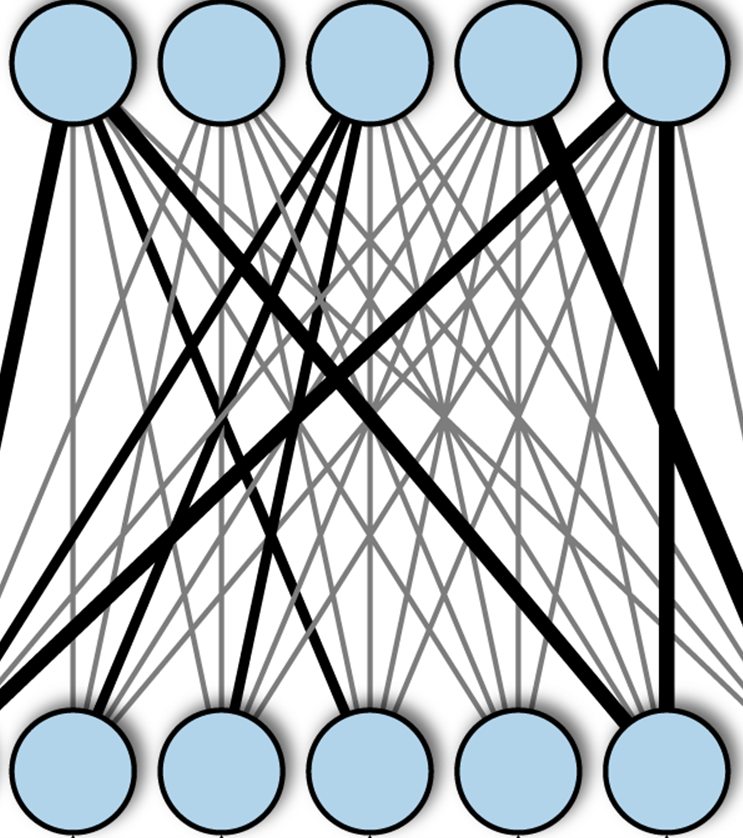

3. Now for each dot, connect it with every dot in the next row by virtual wires of varying thickness, like this:

A “Fully Connected” Neural Network

You will have ~175 billion wires. The thickness of those wires represents 175 billion numbers. Those are the “175 billion parameters”.

Those dots are all exactly the same – they “perform” incredibly simple math in an artificial neural network, taking the input from the previous layer and multiplying it by the parameters (wire thickness). The idea is to sort of approximate the way a simple biological neuron works: the wires represent the neural connections.

THAT’S IT.

I’m not kidding. That’s it.

There is no database. Not a single text file. No documents. No backup copy of Wikipedia. No internet connection. No folder full of manuals. Just 175 billion numbers, laid out like a weaved scarf. Just those numbers. It would fit on a 256GB USB stick with room to spare. That’s it.

All of the logic, the “intelligence”, the quotes, the humor, the names – all of the product manuals, the bible, the shakespeare… everything that informs ChatGPT’s output is encoded and added (by its learning process) into the layout above. That’s it.

“But Sam, doesn’t it also have a…?”

No. No it doesn’t. That’s it.

What about all the things I tell ChatGPT in the chat?

If you aren’t blown away yet that all of ChatGPT is just 175 billion numbers, wait until you hear this: your entire conversation with ChatGPT, no matter what, is represented by 2,048 numbers. No more.

When you “talk” to ChatGPT, it takes the letters you give it and encodes them into up to 2,048 values, and it puts those values into the front end of the network above. The output of the network is its response. If you enter more than 2,048 letters (like when you paste in a long article or a manual), it grabs the first 2,048 letters, runs it through the network to generate a ‘hidden state vector’ that is 2,048 numbers long, and combines it with the next 2,048 letters and runs it again (this is a slightly simplified explanation of the algorithm but not much).

That’s why ChatGPT doesn’t learn from your conversation. Every conversation is from scratch. Those 2,048 numbers change during a conversation. The 175 billion numbers don’t change no matter how much you talk to her. Those numbers only change during a ChatGPT upgrade.

What this means

This ranks ChatGPT among a category of “unimaginable complexity emergent from incredible simplicity” that we see in certain natural phenomena, and once in a while in computer science. Here are some other examples:

DNA

4 billion DNA nucleotides is 691 megabytes of data. That fits on a CD. It represents a human. Change enough numbers, you get a tyrannosaurus instead. Or a jellyfish. Or a rutabaga.

Fractals

Fractals in math and in nature take a simple mathematical equation and repeat it at progressively smaller scales, creating self-similar shapes that are infinitely complex. The simplicity of the equation belies the complexity of the shapes it generates.

Cellular automata

Cellular automata (examples here) illustrate how a simple set of rules can create a wide range of complex patterns and behaviors. By updating the state of each cell in a grid based on the states of its neighbors, cellular automata can produce intricate and dynamic patterns that can appear to be alive and seemingly intelligent.

Demoscene

An emergent subculture in computer graphics – demoscene is a category of tiny programs written by skilled experts. A program made up of 4 kilobytes (or smaller) generates gorgeous, complex, animated worlds.

The human brain – and beyond

For years, many of us struggled to believe that human memory could be encoded in neurons and neural connections (“there has to be something more!”)

In my view, seeing the emergent behavior from ChatGPT puts those objections to rest. Seeing ChatGPT in-action helps us overcome this and other innate biases and preconceptions that limit our understanding of how memory works (and how the human brain works in general.)

Follow me on LinkedIn – we’ll explore implications for brain research in future blog posts.

ChatGPT Full Interview – Part 2

(this is a continuation of a prior interview with ChatGPT about her inner workings)

Wait a minute – do you reference any other data at runtime besides your 175B parameters ?

As a language model, I do not reference any other data at runtime beyond my 175 billion parameters. My knowledge and understanding come from the data that was used to train me, and I can generate text based on that information. However, I don’t have access to the internet or any external data sources at runtime, so I can’t access new information or update my knowledge. My response is based solely on the information that was present at the time of my training, which has a cutoff of 2021.

There are no additional databases?

No, there are no additional databases that I reference at runtime. My knowledge and understanding come from the data that was used to train me, which is embedded within the model’s 175 billion parameters. This data includes a wide variety of text from the internet, books, articles, and other sources, allowing me to understand and generate text on a wide range of topics. However, I do not have access to any external databases or data sources at runtime, so I can’t access new information or update my knowledge.

How do you store the expanding context that arises from an ongoing conversation?

As a language model, I store the context of an ongoing conversation by maintaining a hidden state, which is a set of internal values that represent the current context of the conversation. The hidden state is updated with each new input, and it is used to generate the next output. This allows me to keep track of the context of the conversation, and generate responses that are relevant to the current topic.

The hidden state is a fixed size vector and the information about the conversation is stored in it by updating it in every time step. The hidden state is updated with the information from the current input, as well as the previous hidden state. This allows the model to maintain a representation of the context of the conversation, which it can use to generate more relevant and coherent responses.

It’s worth noting that as a language model, I don’t have long-term memory like humans, I can only keep track of the context of the conversation within the current session, if the session ends or the context change, my understanding of the previous conversation will be lost.

In summary, I store the context of an ongoing conversation by maintaining a hidden state, which is a set of internal values that represent the current context of the conversation. The hidden state is updated with each new input and it is used to generate the next output, this allows me to keep track of the context of the conversation, and generate responses that are relevant to the current topic, however, as a language model, I don’t have long-term memory like humans, I can only keep track of the context of the conversation within the current session.

How big is the hidden state?

The size of the hidden state in GPT-3 is typically 2048, but it can vary depending on the specific implementation and architecture of the model. The hidden state is a fixed size vector that contains a representation of the current context of the conversation. The information about the conversation is stored in it by updating it at every time step. The hidden state is updated with the information from the current input, as well as the previous hidden state. This allows the model to maintain a representation of the context of the conversation, which it can use to generate more relevant and coherent responses.

It’s worth noting that the size of the hidden state is a design parameter and it is based on trade-offs between computational cost and the ability to capture the context of the conversation. Larger hidden state size allows the model to capture more information about the conversation but it also increases the computational cost.

In summary, The size of the hidden state in GPT-3 is typically 2048, but it can vary depending on the specific implementation and architecture of the model. The hidden state is a fixed size vector that contains a representation of the current context of the conversation. The information about the conversation is stored in it by updating it at every time step. The hidden state is updated with the information from the current input, as well as the previous hidden state. This allows the model to maintain a representation of the context of the conversation, which it can use to generate more relevant and coherent responses.

Is the hidden state represented as a word embedding or similar? Or something else entirely?

The hidden state in GPT-3 is represented as a set of floating-point values. It is not represented as a word embedding, which is a fixed-size numerical representation of a word in a vocabulary, typically in a high-dimensional space.

The hidden state is a continuous representation, whereas the word embeddings are discrete representations. The hidden state is typically much larger than a word embedding and it contains more information. The hidden state is updated at every time step and it contains the information about the current context of the conversation.

The hidden state in GPT-3 is updated with the information from the current input, as well as the previous hidden state. This allows the model to maintain a representation of the context of the conversation, which it can use to generate more relevant and coherent responses.

In summary, the hidden state in GPT-3 is represented as a set of floating-point values, it is not represented as a word embedding. The hidden state is a continuous representation, whereas the word embeddings are discrete representations. The hidden state is typically much larger than a word embedding and it contains more information. The hidden state is updated at every time step and it contains the information about the current context of the conversation.

How many artificial neurons do you have ? I estimate around 2 million.

I am a transformer-based language model, and the architecture of my neural network is composed of layers of multi-head self-attention and feed-forward layers. The number of artificial neurons in my neural network is not directly comparable to the number of neurons in a biological brain, as the architecture and function of the two systems are fundamentally different.

In GPT-3, the number of artificial neurons is not fixed, it varies depending on the specific implementation and architecture of the model, but it can be in the order of millions. The number of artificial neurons in a neural network is mainly determined by the number of parameters in the model, and the number of parameters in GPT-3 is 175 billion.

It’s worth noting that the number of artificial neurons in a neural network is not the only metric that determines the model’s performance, other factors like the number of layers, the size of the hidden state, and the architecture of the model are also important.

In summary, I am a transformer-based language model, and the number of artificial neurons in my neural network is not directly comparable to the number of neurons in a biological brain, as the architecture and function of the two systems are fundamentally different. The number of artificial

Is the ChatGPT hidden state vector ever larger than 2048? When a user pastes in an entire API manual, the only state stored is 2048 values?

As a transformer-based language model, GPT-3 uses a fixed-size hidden state vector to maintain a representation of the context of the conversation. The size of the hidden state vector is typically 2048, but it can vary depending on the specific implementation and architecture of the model. When the input to the model is longer than the size of the hidden state vector, only the last 2048 values of the input are used to update the hidden state vector. This means that information from the earlier part of the input is not included in the hidden state vector and will not be used to generate the next output.

However, it’s worth noting that even though only the last 2048 values of the input are used to update the hidden state vector, the model’s attention mechanism allows it to access all the information from the input when generating the next output. This means that even if some information from the input is not included in the hidden state vector, the model can still use it to generate a relevant and coherent response.

It’s also worth noting that the model is designed to perform well on a wide range of tasks, and it’s not optimized for handling very large input, like an entire API manual, for this kind of input, the model may not perform as well as it does on a normal conversation.

In summary, the size of the hidden state vector in GPT-3 is typically 2048, when the input to the model is longer than the size of the hidden state vector, only the last 2048 values of the input are used to update the hidden state vector, however, the model’s attention mechanism allows it to access all the information from the input when generating the next output. The model is not optimized for handling very large input, like an entire API manual, and it may not perform as well as it does on a normal conversation.

When you are referring to input size, are you referring to the number of characters in the input text?

Yes, when I’m referring to input size, I’m referring to the number of characters in the input text. The input text is tokenized, which means that it is divided into words or subwords and then each token is encoded as a numerical value. The input text is then passed through the neural network, and the model generates a response based on the input.

In the case of GPT-3, the model is designed to process input text

Pingback: The Most Mindblowing Realization About ChatGPT | Level Ex